In the new update of DB2, released Friday, IBM has added a set of acceleration technologies, collectively code-named BLU, that promise to make the venerable database management system (DBMS) better suited for running large in-memory data analysis jobs.

“BLU has significant benefits for the analytic and reporting workloads,” said Tim Vincent, IBM’s vice president and chief technology officer for information management software.

Developed by the IBM Research and Development Labs, BLU (a development code name that stood for Big data, Lightning fast, Ultra easy) is a bundle of novel techniques for columnar processing, data deduplication, parallel vector processing and data compression.

The focus of BLU was to enable databases to be “memory optimised,” Vincent said. “It will run in-memory, but you don’t have to put everything in-memory.” The BLU technology can also eliminate the need for a lot of hand-tuning of SQL queries to boost performance.

Because of BLU, DB2 10.5 could speed data analysis by 25 times or more, IBM claimed. This improvement could eliminate the need to purchase a separate in-memory database – such as Oracle’s TimesTen – for speedy data analysis and transaction processing jobs. “We’re not forcing you from a cost model perspective to size your database so everything fits in-memory,” Vincent said.

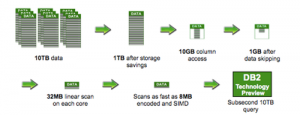

On the Web, IBM provided an example of how a 32-core system using BLU technologies could execute a query against a 10TB data set in less than a second.

“In that 10TB, you’re [probably] interacting with 25 percent of that data on day-to-day operations. You’d only need to keep 25 percent of that data in-memory,” Vincent said. “You can buy today a server with a terabyte of RAM and 5TB of solid state storage for under $35,000.”

Also, using DB2 could cut the labour costs of running a separate data warehouse, given that the pool of available database administrators is generally larger than that of data warehouse experts. In some cases, it could even serve as an easier-to-maintain alternative to the Hadoop data processing platform, Vincent said.

Among the new technologies is a compression algorithm that stores data in such a way that, in some cases, the data does not need to be decompressed before being read. Vincent explained that the data is compressed in the order in which it is stored, which means predicate operations, such as those in a query's WHERE clause, can be executed without decompressing the dataset.
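IBM has not published the algorithm in this detail, so the following is only a minimal sketch of one well-known way to get the effect Vincent describes: order-preserving dictionary encoding. The function names and the sample `prices` column are hypothetical. Because the codes sort the same way as the original values, a comparison can be answered on the codes alone.

```python
from bisect import bisect_left

def build_dictionary(values):
    """Sorted distinct values; a value's position is its code, so code order matches value order."""
    return sorted(set(values))

def encode(values, dictionary):
    """Replace each value with its (small integer) position in the dictionary."""
    return [bisect_left(dictionary, v) for v in values]

def rows_where_less_than(codes, dictionary, bound):
    """Evaluate `value < bound` by comparing codes only; stored values are never decoded."""
    bound_code = bisect_left(dictionary, bound)  # count of dictionary entries below bound
    return [row for row, code in enumerate(codes) if code < bound_code]

prices = [40, 15, 99, 15, 62, 40]        # hypothetical column
dictionary = build_dictionary(prices)
codes = encode(prices, dictionary)
matches = rows_where_less_than(codes, dictionary, 50)  # like WHERE price < 50
```

Only the search bound is translated into code space, once per query; the compressed column itself is scanned as-is.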

Another time-saving trick: the software keeps a metadata table that lists the high and low key values for each data page, or column of data. So when a query is executed, the database can first check whether any of the sought values could be on a given data page, and skip the page if they could not.

“If the page is not in-memory, we don’t have to read it into memory. If it is in-memory, we don’t have to bring it through the bus to the CPU and burn CPU cycles analysing all the values on the page,” Vincent said. “That allows us to be much more efficient on our CPU utilisation and bandwidth.”
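The page-skipping idea can be illustrated with a small sketch. It assumes a column split into fixed pages held as Python lists; `build_synopsis` and `find` are hypothetical names, not DB2 interfaces. The point is simply that a page whose low-to-high range cannot contain the sought value is never scanned.

```python
def build_synopsis(pages):
    """Record the (low, high) value for each non-empty page of a column."""
    return [(min(page), max(page)) for page in pages]

def find(pages, synopsis, target):
    """Equality search that scans only pages whose range can contain target.

    Returns the matching (page_no, slot) positions and how many pages were read.
    """
    matches, pages_read = [], 0
    for page_no, (low, high) in enumerate(synopsis):
        if target < low or target > high:
            continue  # the metadata rules this page out; skip it entirely
        pages_read += 1
        matches.extend(
            (page_no, slot)
            for slot, value in enumerate(pages[page_no])
            if value == target
        )
    return matches, pages_read

pages = [[3, 8, 5], [21, 17, 25], [40, 33, 38]]  # hypothetical column data
synopsis = build_synopsis(pages)
matches, pages_read = find(pages, synopsis, 17)
```

In this example only one of the three pages is read; the benefit depends on how well the value ranges of different pages separate.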

With columnar processing, a query can pull in just the selected columns of a database table, rather than entire rows, which would consume more memory. “We’ve come up with an algorithm that is very efficient in determining which columns and which ranges of columns you’d want to cache in-memory,” Vincent said.
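As a rough illustration of the difference between the two layouts (not of IBM's caching algorithm, which the article does not describe), the sketch below pivots a hypothetical three-row table into per-column lists. An aggregate over one column then touches that column only.

```python
# Row layout: every record carries all of its fields together.
rows = [
    {"order_id": 1, "region": "EU", "amount": 120},
    {"order_id": 2, "region": "US", "amount": 75},
    {"order_id": 3, "region": "EU", "amount": 310},
]

def to_columns(rows):
    """Pivot row records into one list per column."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns

columns = to_columns(rows)

# Like SELECT SUM(amount): only the "amount" list is read;
# "order_id" and "region" are never touched.
total = sum(columns["amount"])
```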

On the hardware side, the software comes with parallel vector processing capabilities, a way of applying a single instruction to multiple data values at once using the SIMD (Single Instruction, Multiple Data) instructions available on Intel and PowerPC chips. The software can then run a single query against as many columns as the system can place on a register. “The register is the most efficient memory utilisation aspect of the system,” Vincent said.
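Real SIMD instructions are issued by the CPU and cannot be shown directly in a scripting language, so the sketch below only emulates the idea in plain Python: four 8-bit values are packed into one 32-bit word, and a fixed handful of whole-word operations tests all four lanes at once. The lane width, the constants and the function names are assumptions chosen for illustration, and the zero-byte test is the classic bit trick rather than anything taken from DB2.

```python
LANES = 4            # four 8-bit lanes in one 32-bit "register"
LANE_BITS = 8
LOW = 0x01010101     # 0x01 in every lane
HIGH = 0x80808080    # top bit of every lane
MASK = 0xFFFFFFFF    # keep results to 32 bits

def pack(values):
    """Pack four 8-bit values into one 32-bit integer, first value in the lowest lane."""
    assert len(values) == LANES and all(0 <= v < 256 for v in values)
    word = 0
    for lane, value in enumerate(values):
        word |= value << (lane * LANE_BITS)
    return word

def any_lane_equals(word, target):
    """True if any lane equals target, without looping over the lanes."""
    diff = word ^ (target * LOW)   # lanes equal to target become 0x00
    # Classic zero-byte test: non-zero exactly when some lane of diff is 0x00.
    return ((diff - LOW) & ~diff & HIGH & MASK) != 0

word = pack([3, 7, 7, 1])
has_seven = any_lane_equals(word, 7)
has_five = any_lane_equals(word, 5)
```

The work per word stays the same however many lanes it holds, which is why fitting more values into a register pays off.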

IBM is not alone in investigating new ways of cramming large databases into server memory. Last week, Microsoft announced that its SQL Server 2014 would also come with a number of techniques, collectively called Hekaton, to maximise the use of working memory, as well as a columnar processing technique borrowed from Excel’s PowerPivot technology.

Database analyst Curt Monash, of Monash Research, has noted that with IBM’s DB2 10.5 release, Oracle is “now the only major relational DBMS vendor left without a true columnar story.”

IBM itself is using the BLU components of DB2 10.5 as a cornerstone for its DB2 SmartCloud infrastructure as a service (IaaS), to add computational heft for data reporting and analysis jobs. It may also insert the BLU technologies into other IBM data store and analysis products, such as Informix.